A key promise of AI for businesses is the ability to ask your company’s database complex business questions in plain English and get accurate, detailed answers within seconds. Thanks to advances in natural language processing, this long-held dream of natural language interfaces is rapidly becoming a reality.

However, as the complexity of real-world enterprise data and business questions grows, shallow reasoning AI agents are hitting their limitations.

The Growing Challenge for Data Analysts

Data analysts working with massive enterprise SQL databases—whether in Google BigQuery, Snowflake, or other data warehouses—are facing a problem: Business questions are becoming more layered and complex. AI Analytics Agents – those natural language interfaces that convert questions into database queries – often misinterpret the business questions that matter most. This leads to misleading or partial answers and missed opportunities.

Before even writing a query, analysts must:

- Interpret intent and determine output format

- Decide if a question requires a single query or multiple sequential ones.

- Understand slicing, joining, and chaining requirements.

Reasoning Gap in Current Text-to-SQL

If you've ever tried using ChatGPT (with limited custom context) or other shallow-reasoning AI agents to generate SQL for complex business questions, you've likely experienced the frustration of receiving technically correct but analytically incomplete results. Analysts waste hours manually stitching together what an AI agent should have done seamlessly. As a result, business decisions become slower, riskier, and less data-driven.

This isn't just an annoyance—it represents a fundamental limitation in how most AI agents process multi-layered reasoning tasks.

The problem? Most AI agents handle complex questions poorly. When confronted with multi-intent or multi-step questions, they:

- Break questions into rigid, disconnected parts, losing critical dependencies

- Miss contextual nuances, leading to misleading or partial insights

- Struggle with multi-intent logic, often returning simplistic or incorrect results

This article explores the root causes of these NLP analytics failures and how a new Intent Graph Agent architecture can overcome them, so that next-gen analytics tools deliver on their promise.

The Four Most Common Types of Complex Analytics Questions

Business questions are rarely trivial – they often involve multiple criteria, comparisons, or sequential logic. Shallow reasoning AI analytics agents (often using text-to-SQL) tend to break down such questions into simpler sub-queries, answer them independently, and then attempt to stitch together a response.

Think of a server who hears “I’d like a three-course meal: appetizer, main, and dessert” and then brings each course in random order because they treat each part as an isolated request - or, even worse, forgets to bring the main course. The intent was a cohesive meal, but the execution is fragmented. Similarly, shallow reasoning RAG-based agents (RAG = Retrieval-Augmented Generation) split a complex question into parts and retrieve or generate answers piecewise.

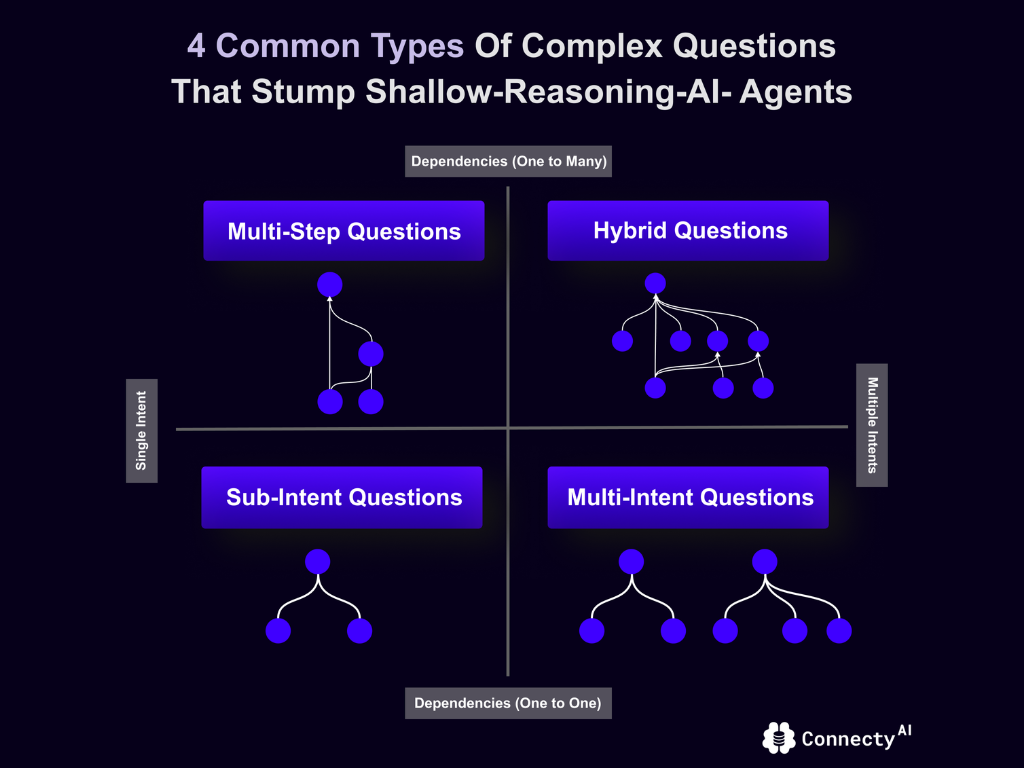

What kinds of questions typically trip up AI? We can broadly categorize them into four overlapping types (illustrated in the quadrant chart) that AI agents must handle:

1. Sub-Intent Questions

Sub-intent occurs when a query contains a main intent with secondary, dependent sub-questions, but these sub-questions still belong to the broader main intent.

Types of Sub-Intent Queries:

a) Sub-intent is derived, e.g., Metrics

Example: "Calculate the supplier reliability ratio for the top 5 suppliers." Here, "supplier reliability ratio" itself must be defined and computed dynamically, often requiring sub-queries like:

- "Total on-time deliveries vs. total deliveries per supplier" (Core metric for reliability)

- "Identify the top 5 suppliers based on order volume" (Filter only top performers before calculating reliability)

AI agents may fail to:

- Derive the definition of the "supplier reliability" metric, or filter to the top 5 suppliers before calculating the ratio

- Execute sub-intents in the correct order (first, identify top suppliers; then, compute the reliability metric)

b) Sub-intent is clarified in the question

Example: "Show the total revenue per supplier. Then, break it down by year and region. Also, list the top-selling part per region."

AI agents may fail to process this as one unified question and instead split it into separate, sometimes distorted, questions:

- "Total revenue per supplier"

- "Revenue breakdown by year and region"

- "Top-selling part per region"

- "Supplier and revenue data" (irrelevant generalization)

- "Yearly and regional revenue data" (context misunderstood)

2. Multi-Intent Questions

Multi-intent means the question contains separate, independent requests that could each be answered stand-alone.

Example: "How many items did we ship yesterday? And what was the average order value yesterday?"

AI Agents may fail to:

- Recognize the independence of each query component, sometimes trying to force relationships that don't exist or ignoring one of the parts.

3. Multi-Step Questions

Multi-step means the question has dependent intermediate steps: it entails sequential steps, where the answer to the first part is used in the next to reach the final result. There are two scenarios here:

a) Requires understanding the structure and expected output of the previous query to build upon it

Example: "How many items did we ship yesterday? Cluster those items by category based on their descriptions, e.g., necklace as jewelry."

b) Only requires incorporating the logical structure of the first part into subsequent queries

Example: "Find the most ordered part by each supplier. Then, group suppliers by region and show the region with the highest order volume."

AI Agents may fail to:

- Connect query structures between steps, i.e., understanding how to reference or build upon the logical structure of a previous query.

- Apply intermediate transformations (e.g., needing to incorporate conditional logic based on the structure of previous query components).

4. Hybrid Questions

Hybrid questions are the most complex: they mix sub-intents, multi-intents, and multi-step logic, often with several sub-intents and independent intents intertwined.

Example: "Show how many items we shipped yesterday. Also, show the total revenue from those shipments. Then, cluster those items by category based on their descriptions."

AI Agents may fail to:

- Dynamically inherit contextual filters (e.g., regional segmentation) across steps, executing queries as isolated blocks, and producing incomplete data.

These complex question structures are not rare – in fact, they mirror how real business queries are asked. Research on conversational BI has shown that complex questions are usually answered through interactive exchanges: people tend to ask multiple interrelated questions rather than a single perfectly formed query. In other words, the questions that unlock massive enterprise value are inherently complex. Unfortunately, today’s agents often mishandle them. Next, we examine the failure modes in detail.

Failures: From Intent Confusion to Invalid Results

Shortcomings of shallow reasoning AI Agents

When faced with multi-layer or multi-intent questions, shallow reasoning systems encounter four common failures:

Failure #1: Context Fragmentation and Its Impact on Analysis Quality

One of the most common failure modes is context fragmentation. This happens when an AI agent fails to carry over a filter or condition from one part of a question to another, effectively answering a different question than asked. The agent treats each piece of the query in isolation, so it “forgets” the context established by earlier parts.

Example input: "Calculate the average order value for our top 10 customers, then break it down by product category."

What happens internally? This is a classic sub-intent question. There’s one main intent (average order value, AOV), but it’s qualified: first find the top 10 customers, then within that filtered set, calculate AOV by category. Most text-to-SQL agents will chunk this into two separate queries:

- Query 1: Find the top 10 customers by total spend.

- Query 2: Calculate average order value by product category.

This leads to semantically disconnected SQL:

- The agent executes Query 2 for all customers by category because it lost the filter context.

- The outcome is an incorrect result that doesn’t answer the business question.

Example using TPC-H dataset:

```sql
-- What an AI agent may generate (INCORRECT):
-- Query 1: Gets top 10 customers
SELECT c_custkey, c_name, SUM(o_totalprice) as total_spent
FROM customer
JOIN orders ON c_custkey = o_custkey
GROUP BY c_custkey, c_name
ORDER BY total_spent DESC
LIMIT 10;

-- Query 2: Calculates AOV by category (WITHOUT applying top customer filter)
SELECT p_type, AVG(l_extendedprice * (1-l_discount)) as avg_order_value
FROM lineitem
JOIN part ON l_partkey = p_partkey
GROUP BY p_type
ORDER BY avg_order_value DESC;
```

Conclusion #1: The second query completely loses the context that we only want this analysis for the top 10 customers! Solving this requires context propagation – the ability to maintain state (filters, conditions) throughout multi-step queries.
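One way to picture context propagation is to keep the top-customer filter in a CTE and have the second step reference it. The sketch below is a minimal, hypothetical illustration that uses Python's built-in `sqlite3` module instead of BigQuery or Snowflake. The toy rows, the function names `build_demo_db` and `top_customer_revenue_by_type`, and the small `top_n` values are assumptions made for the demo. It reuses the expression from the incorrect query above, which averages line-item revenue per part type.

```python
import sqlite3

# Toy stand-in for three TPC-H tables. Column names follow TPC-H; the rows are invented.
SCHEMA = """
CREATE TABLE orders   (o_orderkey INTEGER, o_custkey INTEGER, o_totalprice REAL);
CREATE TABLE lineitem (l_orderkey INTEGER, l_partkey INTEGER,
                       l_extendedprice REAL, l_discount REAL);
CREATE TABLE part     (p_partkey INTEGER, p_type TEXT);
"""

ROWS = {
    "orders":   [(1, 1, 500.0), (2, 2, 300.0), (3, 3, 10.0)],
    "lineitem": [(1, 10, 500.0, 0.0), (2, 20, 300.0, 0.0), (3, 10, 10.0, 0.0)],
    "part":     [(10, "BRASS"), (20, "STEEL")],
}


def build_demo_db():
    """Create an in-memory database holding the toy rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    for table, rows in ROWS.items():
        placeholders = ",".join("?" * len(rows[0]))
        conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    return conn


def top_customer_revenue_by_type(conn, top_n):
    """Average line revenue per part type, restricted to the top-N customers."""
    sql = """
    WITH top_customers AS (          -- step 1: the filter that must be carried forward
        SELECT o_custkey
        FROM orders
        GROUP BY o_custkey
        ORDER BY SUM(o_totalprice) DESC
        LIMIT ?
    )
    SELECT p.p_type,
           AVG(l.l_extendedprice * (1 - l.l_discount)) AS avg_line_revenue
    FROM lineitem AS l
    JOIN orders   AS o ON o.o_orderkey = l.l_orderkey
    JOIN part     AS p ON p.p_partkey  = l.l_partkey
    WHERE o.o_custkey IN (SELECT o_custkey FROM top_customers)  -- step 2 reuses step 1
    GROUP BY p.p_type
    ORDER BY p.p_type
    """
    return conn.execute(sql, (top_n,)).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    print(top_customer_revenue_by_type(conn, 2))  # filter applied
    print(top_customer_revenue_by_type(conn, 3))  # all three customers, i.e. no effective filter
```

With the toy rows, the BRASS average changes once the third, low-spend customer is included, which is the kind of silent shift that a lost filter produces.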

Failure #2: Intent Hierarchy Confusion — One Intent or Many?

Most AI Agents struggle to distinguish between:

- Sub-intents (dependent components of a main question)

- Multi-intents (separate, independent questions)

- Multi-step logic (sequential queries where later steps depend on earlier ones)

In other words, the agent cannot discern which parts of the query are sub-intents of a main question and which parts are independent intents. This often leads to the AI either splitting what should stay together or lumping together what should be separate. Hybrid questions are where all the shortcomings of an agent are exposed.

Example: User: "Show our monthly revenue trend for Q1. Also, identify customers who have increased spending by >20% compared to last quarter. For these growth customers, analyze their product preferences." This query has multiple components:

- Overall monthly revenue trend for Q1 (one intent),

- Finding “growth” customers (second intent),

- Analyzing those customers’ product preferences (third intent).

Critically, parts (2) and (3) are linked (a multi-step sub-analysis on the same subset of customers), while part (1) is separate (an independent overview).

Most AI Agents will misinterpret this as…

- Either three separate, unrelated questions (losing the connection between growth customers and product preferences)

- Or, a single monolithic query (attempting to force all components into one SQL statement)

Technical problem: Both of these outcomes are wrong. The AI agent lacks a formalized intent parsing model to recognize that:

- The question contained two main intents (the revenue trend, and the customer analysis),

- And that within the customer analysis, there was a sub-intent dependency (find customers, then analyze them)

Conclusion #2: Shallow-reasoning AI agents struggle with intent hierarchy confusion, treating multi-layered business questions as monolithic or isolated prompts. This failure to parse nested sub-intents or contextual dependencies results in disjointed SQL outputs, overlooking critical relationships between segmented queries and undermining analytical precision.
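To make the distinction between independent intents and dependent sub-intents concrete, the example question can be written down as a small data structure. This is only a sketch of one possible representation, not a description of any particular product's parser. The `Intent` class, the intent names, and the `independent_groups` helper are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    """One analytical task. `depends_on` names the intents whose output it needs."""
    name: str
    question: str
    depends_on: list[str] = field(default_factory=list)


def independent_groups(intents):
    """Group intents so that anything linked by a dependency lands in the same group."""
    parent = {intent.name: intent.name for intent in intents}

    def find(name):
        # Follow parent links up to the representative of the group.
        while parent[name] != name:
            name = parent[name]
        return name

    for intent in intents:
        for dep in intent.depends_on:
            parent[find(intent.name)] = find(dep)

    groups = {}
    for intent in intents:
        groups.setdefault(find(intent.name), []).append(intent.name)
    return list(groups.values())


# Hand-written parse of the example question (what an intent parser would need to produce).
EXAMPLE = [
    Intent("revenue_trend", "Monthly revenue trend for Q1"),
    Intent("growth_customers", "Customers whose spending rose by more than 20%"),
    Intent("product_preferences", "Product preferences of the growth customers",
           depends_on=["growth_customers"]),
]

if __name__ == "__main__":
    print(independent_groups(EXAMPLE))
```

The grouping yields two main intents: the revenue trend on its own, and the customer analysis with its dependent sub-intent attached. Neither "three unrelated questions" nor "one monolithic query" matches that shape.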

Failure #3: Single-Pass SQL Generation — Lacking Granular Self-Refinement for Multi-Step Analysis

Even when an AI agent correctly interprets the structure of a question, it can fail in how it executes the solution. Most AI Agents use a "generate-once" approach—they produce SQL without considering how intermediate results might affect subsequent queries. This approach is inherently brittle for multi-step analytics.

Example problem: "Find products with unusual sales patterns last month, then analyze customer demographics for purchasers of these specific products."

The shallow-reasoning AI agent has no mechanism to:

- Generate the first query to identify which products had unusual sales (this likely involves computing some metrics or detecting anomalies per product)

- Review the results (the specific list of products that show unusual patterns) and use them to filter the customer data and analyze the demographics of buyers of those products.

Conclusion #3: It lacks the ability to iterate and self-refine. Without a dynamic execution engine that can read intermediate results, the AI must guess at what the first query might return, leading to imprecise or invalid filtering in subsequent steps.
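The difference between generate-once and step-by-step execution can be sketched in a few lines: run the first query, read its rows, and only then build the second query from what actually came back. The example below is a hypothetical illustration on an in-memory SQLite database. The `sales` and `customers` tables, the function names, and the "more than twice the per-product average" rule (a stand-in for a real anomaly detector) are assumptions.

```python
import sqlite3


def build_sales_db():
    """In-memory toy data: who bought which product, and each customer's age band."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER, age_band TEXT);
    CREATE TABLE sales     (customer_id INTEGER, product TEXT, units INTEGER);
    INSERT INTO customers VALUES (1, '18-34'), (2, '35-54'), (3, '18-34');
    INSERT INTO sales VALUES (1, 'A', 100), (2, 'A', 50), (3, 'B', 5),
                             (1, 'C', 5),   (2, 'B', 5);
    """)
    return conn


def find_unusual_products(conn, factor=2.0):
    """Step 1: products whose units sold exceed `factor` times the per-product average."""
    sql = """
    WITH per_product AS (
        SELECT product, SUM(units) AS units FROM sales GROUP BY product
    )
    SELECT product FROM per_product
    WHERE units > ? * (SELECT AVG(units) FROM per_product)
    ORDER BY product
    """
    return [row[0] for row in conn.execute(sql, (factor,))]


def buyers_by_age_band(conn, products):
    """Step 2: built only after step 1 has run, from its actual output."""
    if not products:
        return []  # nothing unusual was found, so there is nothing to analyze
    placeholders = ",".join("?" * len(products))
    sql = f"""
    SELECT c.age_band, COUNT(DISTINCT c.customer_id) AS buyers
    FROM sales AS s
    JOIN customers AS c ON c.customer_id = s.customer_id
    WHERE s.product IN ({placeholders})
    GROUP BY c.age_band
    ORDER BY c.age_band
    """
    return conn.execute(sql, products).fetchall()


if __name__ == "__main__":
    conn = build_sales_db()
    unusual = find_unusual_products(conn)       # intermediate result is inspected here
    print(unusual, buyers_by_age_band(conn, unusual))
```

Because the second query is parameterized with the first query's rows, the empty-result case can be handled explicitly instead of producing a filter that matches nothing or everything.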

Failure #4: Metric Extraction Mistakes

The most stubborn failure mode appears when the agent lacks a governed semantic layer or rich metadata intelligence. Interpreting metrics (quantitative measures) and dimensions (categorical or descriptive attributes) from the intent is one of the hardest challenges, often resulting in queries that are technically valid but semantically irrelevant.

Business stakeholders phrase questions in natural language (“monthly sales”, “average revenue”), but an agent without contextual knowledge will latch onto whichever column names look similar in any pre-aggregated table, often producing syntactically correct yet semantically wrong SQL. This increases manual validation effort and introduces silent errors—especially once table joins enter the picture.

Four broad failure patterns keep surfacing in the absence of a reliable semantic layer:

- Conflicting metric names – A single term like “sales” can map to several formulas, e.g., count of items sold or sum of the prices of items sold; lacking context, the agent picks one arbitrarily.

- Granularity mismatch – The agent sums a header-level amount even though the correct metric lives at line-item granularity.

- Measure-type confusion – It treats absolute values and percentage rates as interchangeable, aggregating each the same way.

- Wrong ranking key – When asked for “top” entities, it orders by total value even though the business cares about usage frequency (or vice-versa).
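The granularity mismatch is easy to reproduce. In the hypothetical sketch below, a header-level amount (`o_totalprice`) is summed after a join to line items, so every order is counted once per line. The two-table schema borrows TPC-H column names, while the rows and function names are invented for the demo.

```python
import sqlite3


def build_orders_db():
    """Two orders: order 1 has two line items, order 2 has one."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
    CREATE TABLE orders   (o_orderkey INTEGER, o_totalprice REAL);
    CREATE TABLE lineitem (l_orderkey INTEGER, l_extendedprice REAL);
    INSERT INTO orders   VALUES (1, 100.0), (2, 40.0);
    INSERT INTO lineitem VALUES (1, 60.0), (1, 40.0), (2, 40.0);
    """)
    return conn


def sum_after_join(conn):
    """Header-level amount summed at line-item grain: order 1 is counted twice."""
    sql = """
    SELECT SUM(o.o_totalprice)
    FROM orders AS o
    JOIN lineitem AS l ON l.l_orderkey = o.o_orderkey
    """
    return conn.execute(sql).fetchone()[0]


def sum_at_order_grain(conn):
    """The same measure aggregated at the grain where it is defined."""
    return conn.execute("SELECT SUM(o_totalprice) FROM orders").fetchone()[0]


if __name__ == "__main__":
    conn = build_orders_db()
    print(sum_after_join(conn), sum_at_order_grain(conn))
```

Both statements are valid SQL and both look like "total sales", which is why this class of error tends to pass unnoticed without metadata that records the grain of each measure.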

Having dissected the common failure modes, we see a clear pattern. Shallow-reasoning AI agents lack a robust internal representation of intent and context. They operate like black boxes that map a question to an answer, without reasoning in a human-like way about what the question means and how to break it down. As a result, they struggle with complex, multi-layered questions that require a bit of planning, memory, and clarification – in other words, exactly the kind of questions that yield massive enterprise value when answered correctly.

Benchmarking Your AI Analytics Agent

To test if your AI tool can handle complex reasoning, here are some examples of questions from the TPC-H sample dataset:

Test Query #1: Sub-Intent with multiple aggregations

"I need a breakdown of our orders. How many orders were placed in each country and region? I’d also like to see the total revenue from those orders, grouped by month. On top of that, can we include the most frequently ordered part for each country-region combo? Let’s sort everything so the most recent months show up first."

```sql
with
  -- Top part(s) by quantity per region, country, and month (dense_rank keeps ties)
  part_ranking as (
    select
      r.r_regionkey,
      n.n_nationkey,
      format_date('%Y-%m', o.o_orderdate) as year_month,
      p.p_name as part_name,
      sum(l.l_quantity) as part_quantity
    from
      `connecty-ai.tpch_tpch_sf1.orders` as o
      inner join `tpch_tpch_sf1.lineitem` as l
        on o.o_orderkey = l.l_orderkey
      inner join `tpch_tpch_sf1.part` as p
        on l.l_partkey = p.p_partkey
      inner join `connecty-ai.tpch_tpch_sf1.customer` as c
        on o.o_custkey = c.c_custkey
      inner join `connecty-ai.tpch_tpch_sf1.nation` as n
        on c.c_nationkey = n.n_nationkey
      inner join `connecty-ai.tpch_tpch_sf1.region` as r
        on n.n_regionkey = r.r_regionkey
    group by
      1, 2, 3, 4
    qualify
      dense_rank() over (partition by r.r_regionkey, n.n_nationkey, year_month order by part_quantity desc) = 1
  ),

  -- Order count and discounted revenue per region, country, and month
  orders_summary as (
    select
      r.r_regionkey,
      n.n_nationkey,
      r.r_name as region,
      n.n_name as country,
      format_date('%Y-%m', o.o_orderdate) as year_month,
      count(distinct o.o_orderkey) as number_of_orders,
      sum(l.l_extendedprice * (1 - l.l_discount)) as revenue
    from
      `connecty-ai.tpch_tpch_sf1.orders` as o
      inner join `tpch_tpch_sf1.lineitem` as l
        on o.o_orderkey = l.l_orderkey
      inner join `connecty-ai.tpch_tpch_sf1.customer` as c
        on o.o_custkey = c.c_custkey
      inner join `connecty-ai.tpch_tpch_sf1.nation` as n
        on c.c_nationkey = n.n_nationkey
      inner join `connecty-ai.tpch_tpch_sf1.region` as r
        on n.n_regionkey = r.r_regionkey
    group by
      1, 2, 3, 4, 5
  )

-- Combine the summary with the top part, most recent months first
select
  os.region,
  os.country,
  os.year_month,
  os.number_of_orders,
  os.revenue,
  pr.part_name,
  pr.part_quantity
from
  orders_summary as os
  inner join part_ranking as pr
    on os.r_regionkey = pr.r_regionkey
    and os.n_nationkey = pr.n_nationkey
    and os.year_month = pr.year_month
order by
  os.year_month desc
```

Test Query #2: Multi-Step With Dynamic Filtering

"I want to analyze monthly customer profitability by country-region combination. For each country-region show:

- Total revenue (sum of order values after discount)

- Total discount given

- Total supply cost

- Most used supplier (who supplied the most items for a given country-region-month)

- Top product category by revenue

- Average order size (number of items per order)

- Sort by year-month in descending order."

```sql
WITH
  -- One row per (part, supplier) pair
  deduped_partsupp AS (
    SELECT
      ps_partkey,
      ps_suppkey,
      ps_supplycost
    FROM
      `tpch_tpch_sf1.partsupp`
    QUALIFY ROW_NUMBER() OVER (PARTITION BY ps_partkey, ps_suppkey) = 1
  ),

  -- Per-order revenue, discount, item count, and supply cost
  order_summary AS (
    SELECT
      FORMAT_DATE('%Y-%m', o.o_orderdate) AS year_month,
      n.n_nationkey,
      r.r_regionkey,
      r.r_name AS region,
      n.n_name AS country,
      o.o_orderkey,
      SUM(l.l_extendedprice * (1 - l.l_discount)) AS order_revenue,
      SUM(l.l_extendedprice * l.l_discount) AS order_discount,
      SUM(l.l_quantity) AS order_number_of_items,
      SUM(ps.ps_supplycost * l.l_quantity) AS order_supply_cost
    FROM
      `connecty-ai.tpch_tpch_sf1.orders` AS o
      INNER JOIN `tpch_tpch_sf1.lineitem` AS l
        ON o.o_orderkey = l.l_orderkey
      INNER JOIN deduped_partsupp AS ps
        ON l.l_partkey = ps.ps_partkey
        AND l.l_suppkey = ps.ps_suppkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.customer` AS c
        ON o.o_custkey = c.c_custkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.nation` AS n
        ON c.c_nationkey = n.n_nationkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.region` AS r
        ON n.n_regionkey = r.r_regionkey
    GROUP BY
      1, 2, 3, 4, 5, 6
  ),

  -- Supplier(s) with the most items supplied per month and country-region
  supplier_rank AS (
    SELECT
      FORMAT_DATE('%Y-%m', o.o_orderdate) AS year_month,
      n.n_nationkey,
      r.r_regionkey,
      s.s_name AS most_used_supplier,
      SUM(l.l_quantity) AS number_of_items_supplied
    FROM
      `connecty-ai.tpch_tpch_sf1.orders` AS o
      INNER JOIN `tpch_tpch_sf1.lineitem` AS l
        ON o.o_orderkey = l.l_orderkey
      INNER JOIN `tpch_tpch_sf1.supplier` AS s
        ON l.l_suppkey = s.s_suppkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.customer` AS c
        ON o.o_custkey = c.c_custkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.nation` AS n
        ON c.c_nationkey = n.n_nationkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.region` AS r
        ON n.n_regionkey = r.r_regionkey
    GROUP BY
      1, 2, 3, 4
    QUALIFY
      DENSE_RANK() OVER (
        PARTITION BY
          year_month, n.n_nationkey, r.r_regionkey
        ORDER BY
          number_of_items_supplied DESC
      ) = 1
  ),

  -- Top product type(s) by revenue per month and country-region
  product_rank AS (
    SELECT
      FORMAT_DATE('%Y-%m', o.o_orderdate) AS year_month,
      n.n_nationkey,
      r.r_regionkey,
      p.p_type AS top_product_category,
      SUM(l.l_extendedprice * (1 - l.l_discount)) AS product_category_revenue
    FROM
      `connecty-ai.tpch_tpch_sf1.orders` AS o
      INNER JOIN `tpch_tpch_sf1.lineitem` AS l
        ON o.o_orderkey = l.l_orderkey
      INNER JOIN `tpch_tpch_sf1.part` AS p
        ON l.l_partkey = p.p_partkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.customer` AS c
        ON o.o_custkey = c.c_custkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.nation` AS n
        ON c.c_nationkey = n.n_nationkey
      INNER JOIN `connecty-ai.tpch_tpch_sf1.region` AS r
        ON n.n_regionkey = r.r_regionkey
    GROUP BY
      1, 2, 3, 4
    QUALIFY
      DENSE_RANK() OVER (
        PARTITION BY
          year_month, n.n_nationkey, r.r_regionkey
        ORDER BY
          product_category_revenue DESC
      ) = 1
  )

-- Roll orders up to month and country-region, then attach the ranked supplier and category
SELECT
  os.year_month,
  os.region,
  os.country,
  SUM(os.order_revenue) AS total_revenue,
  SUM(os.order_discount) AS total_discount,
  AVG(os.order_number_of_items) AS average_order_size,
  SUM(os.order_supply_cost) AS total_supply_cost,
  sr.most_used_supplier,
  pr.top_product_category
FROM
  order_summary AS os
  INNER JOIN supplier_rank AS sr
    ON os.year_month = sr.year_month
    AND os.n_nationkey = sr.n_nationkey
    AND os.r_regionkey = sr.r_regionkey
  INNER JOIN product_rank AS pr
    ON os.year_month = pr.year_month
    AND os.n_nationkey = pr.n_nationkey
    AND os.r_regionkey = pr.r_regionkey
GROUP BY
  1, 2, 3, 8, 9
ORDER BY
  1 DESC;
```

Test Query #3: Hybrid Intent Graph

"I need a breakdown of our suppliers. Specifically, I want to know how much revenue each supplier generates, which customers contribute the most to their revenue, and what product categories they sell the most. Also, can we calculate what percentage of a supplier’s revenue comes from its top customers? Finally, I’d like to see each supplier’s market share compared to the total revenue across all suppliers. Let’s sort by the biggest suppliers first."

```sql
WITH
  -- Discounted revenue per supplier
  supplier_revenue AS (
    SELECT
      s.s_suppkey,
      s.s_name,
      SUM(l.l_extendedprice * (1 - l.l_discount)) AS total_revenue
    FROM
      `connecty-ai.tpch_tpch_sf1.lineitem` AS l
      JOIN `connecty-ai.tpch_tpch_sf1.supplier` AS s ON l.l_suppkey = s.s_suppkey
    GROUP BY
      s.s_suppkey,
      s.s_name
  ),
  -- Revenue per (supplier, customer) pair
  customer_contribution AS (
    SELECT
      l.l_suppkey,
      o.o_custkey,
      c.c_name as customer_name,
      SUM(l.l_extendedprice * (1 - l.l_discount)) AS customer_revenue
    FROM
      `connecty-ai.tpch_tpch_sf1.lineitem` AS l
      JOIN `connecty-ai.tpch_tpch_sf1.orders` AS o ON l.l_orderkey = o.o_orderkey
      JOIN `connecty-ai.tpch_tpch_sf1.customer` AS c ON o.o_custkey = c.c_custkey
    GROUP BY
      l.l_suppkey,
      o.o_custkey,
      c.c_name
  ),
  -- Most common part type(s) per supplier, counted from partsupp listings
  product_categories AS (
    SELECT
      ps.ps_suppkey,
      p.p_type,
      COUNT(*) AS category_count
    FROM
      `connecty-ai.tpch_tpch_sf1.partsupp` AS ps
      JOIN `connecty-ai.tpch_tpch_sf1.part` AS p ON ps.ps_partkey = p.p_partkey
    GROUP BY
      ps.ps_suppkey,
      p.p_type
    qualify
      dense_rank() over (partition by ps.ps_suppkey order by category_count desc) = 1
  ),
  -- Highest-revenue customer(s) per supplier
  top_customers AS (
    SELECT
      l_suppkey,
      o_custkey,
      customer_name,
      customer_revenue
    FROM
      customer_contribution
    qualify
      dense_rank() over (partition by l_suppkey order by customer_revenue desc) = 1
  ),
  -- Each supplier's share of total revenue, in percent
  supplier_market_share AS (
    SELECT
      s.s_suppkey,
      s.s_name,
      total_revenue,
      (total_revenue / SUM(total_revenue) OVER ()) * 100 AS market_share
    FROM
      supplier_revenue AS s
  )
SELECT
  sr.s_suppkey,
  sr.s_name,
  sr.total_revenue,
  sm.market_share,
  tc.o_custkey AS top_customer_key,
  tc.customer_name as top_customer_name,
  tc.customer_revenue AS top_customer_revenue,
  (tc.customer_revenue / sr.total_revenue) * 100 AS top_customer_revenue_percentage,
  pc.p_type AS top_product_category,
  pc.category_count as top_category_count
FROM
  supplier_revenue AS sr
  JOIN supplier_market_share AS sm ON sr.s_suppkey = sm.s_suppkey
  LEFT JOIN top_customers AS tc ON sr.s_suppkey = tc.l_suppkey
  LEFT JOIN product_categories AS pc ON sr.s_suppkey = pc.ps_suppkey
ORDER BY
  sr.total_revenue DESC;
```

If your AI agent can correctly maintain context and dependencies across all components of these questions, congratulations—you have a surprisingly advanced tool! But more likely, you'll see context loss, flattened analyses, or attempts to force everything into monolithic queries that miss key analytical nuances.

Edge Cases & Explainability in Query Execution

Some questions simply can't be answered with available data, or may require human input.

Example: A business analyst is investigating supplier performance and product profitability, but these two metrics reside in completely separate data models with no natural join key. A similar situation arises when one part of a multi-step query fails due to missing information.

"How many suppliers delivered at least 1,000 units last quarter? Also, what is the average profit margin for products that had more than 500 orders?"

Effective AI systems need explainability layers to identify these scenarios, clearly communicate limitations, and suggest alternatives or additional data requirements.
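One simple building block for such an explainability layer is a check for whether two data models can be joined at all before any SQL is generated. The sketch below assumes a hypothetical join graph (`JOIN_GRAPH`, with invented table names) and uses a breadth-first search to find a chain of joins. When none exists, it returns a message instead of a query.

```python
from collections import deque

# Hypothetical join graph: table -> tables it can be joined to directly.
JOIN_GRAPH = {
    "supplier": {"deliveries"},
    "deliveries": {"supplier"},
    "product": {"order_items"},
    "order_items": {"product"},
}


def join_path(graph, start, goal):
    """Breadth-first search for a chain of joins; returns None if the tables are unrelated."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbor in sorted(graph.get(path[-1], ())):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


def explain(graph, start, goal):
    """Turn the search result into a message a business user can act on."""
    path = join_path(graph, start, goal)
    if path is None:
        return (f"No join path between '{start}' and '{goal}': "
                "answer each part separately or add a linking table.")
    return "Join via: " + " -> ".join(path)


if __name__ == "__main__":
    print(explain(JOIN_GRAPH, "supplier", "deliveries"))
    print(explain(JOIN_GRAPH, "supplier", "product"))
```

For the two-part question above, a check like this would suggest treating the supplier part and the product part as independent intents rather than forcing them into one joined query.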

The Future of AI Agents: Complex Reasoning Business Questions

The next generation of AI agents must bridge the reasoning gap through:

- Intent parsing systems designed specifically for analytical questions

- Dependency graph construction as a formalized intermediate representation

- Dynamic execution planning that adapts to intermediate results

- Multi-agent architectures with specialized reasoning components
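As a rough illustration of dependency graph construction and execution planning, the hybrid question from earlier ("items shipped yesterday", "revenue from those shipments", "cluster those items by category") can be written as a graph and ordered with Python's standard `graphlib` module. The node names and the `execution_waves` helper are hypothetical, and a real planner would also attach a query to each node and pass results along the edges.

```python
from graphlib import TopologicalSorter

# node -> set of nodes it depends on (hand-written for the hybrid example question)
INTENT_GRAPH = {
    "shipments_yesterday": set(),                    # shared filter: items shipped yesterday
    "item_count": {"shipments_yesterday"},
    "shipment_revenue": {"shipments_yesterday"},
    "category_clusters": {"shipments_yesterday"},
}


def execution_waves(graph):
    """Return batches of nodes; each batch only needs results from earlier batches."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()                      # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)               # mark the batch finished so dependents become ready
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(execution_waves(INTENT_GRAPH), start=1):
        print(number, wave)
```

The shared "yesterday" filter is computed once in the first wave, and the three dependent nodes in the second wave all inherit it, which is the one-to-many dependency that isolated query blocks lose.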

A New Approach: Intent Graphs for Complex Questions

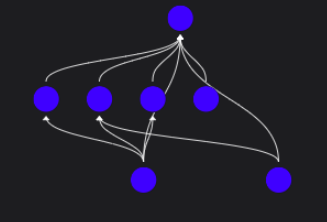

To overcome these limitations, Connecty AI introduces Intent Graph architecture. Instead of relying solely on simplistic intent breakdown, Connecty’s Autonomous Agentic AI’s Deep Reasoning system builds a graph of the user’s intent – a structured representation of the question that explicitly maps out sub-intents and dependencies.

In Connecty’s approach, a user question is no longer treated as a flat string to parse in one go. It’s treated as a problem to solve, and the agent thinks more like a human analyst. It breaks the question into interconnected nodes of computation, where each node is a specific analytical task - exploring every possible solution autonomously. The result is a graph structure that mirrors the question’s intent structure.

How Connecty AI Solves Complex Questions That Unlock Massive Enterprise Value

Connecty AI is an Agentic AI platform for structured data intelligence, powered by Autonomous Deep Reasoning. It understands the messy data in your enterprise data warehouse (e.g., Snowflake, Databricks, BigQuery, PostgreSQL) and generates live metadata catalog intelligence (without transferring any sensitive data) through its Context Engine offering. Connecty’s Agentic AI offers various data solutions using simple natural language, such as analytics, discovery, and query refinement.

Its Agentic AI is specifically designed to tackle complex questions that other AI agents cannot. It leverages a unique Intent Graph Architecture which decomposes complex business questions into computational graphs. These graphs support 1:many dependencies, context preservation, and dynamic execution planning. Connecty’s Autonomous Agentic AI maintains dynamic context across the reasoning chain while ensuring Actionable Explainability. It provides transparent, step-by-step audit trails showing how conclusions were reached, allowing business users to trust and verify every step of the analytical process.

Unlock the full power of your enterprise data with next-gen Agentic AI for structured data

- Intent Graph Architecture

  - Transforms complex questions into structured graphs, where each node represents a sub-intent and each edge captures explicit dependencies

  - Ensures correct execution order across deeply nested, multi-step analytical flows

- Live Context Fetching

  - Maintains stateful awareness across every reasoning step by carrying forward filters, parameters, and intermediate results.

  - Guarantees that no context is lost across branches or iterations of a query, which is crucial for accurate, multi-hop analysis.

- Dynamic Semantic Layer-Driven Metric Intelligence

  - Extracts accurate metric expressions from natural language questions, generating the correct aggregation logic (e.g., sum, average), subject entity (e.g., revenue, orders), and associated dimensions (e.g., by product, per month), powered by deep context intelligence

  - Infers precise join relationships using metadata catalog intelligence, enabling the system to automatically determine how sub-intents relate without manual modeling.

- Explainability Layer

  - Visual Traceability: Each question is mapped visually to an Intent Graph or Dynamic Semantic Layer, offering a clear, step-by-step view of how sub-questions and logic are connected.

  - Actionable Explainability: Users can interact with explanations, simulate alternatives, and receive real-time recommendations, enabling continuous improvement, not just observation.

Conclusion: Agentic AI—That Interprets in Business Context, Not Just Syntax

Most AI Agents were built as straightforward question-to-SQL translators, focusing on syntax over semantics. But, without a paradigm shift, such shallow reasoning AI agents will continue to deliver partial insights and require humans to fill in the gaps. The path forward is Agentic AI that thinks more like a human analyst: maintaining context, breaking problems into sub-problems, asking clarifying questions, and leveraging domain knowledge.

An Intent Graph based Agentic architecture embodies this by providing a structured thinking process under the hood of a natural language interface. It ensures that AI for data analysis can tackle the hard questions – those that span multiple data points, multiple steps, and multiple interpretations – thereby truly unlocking the promised AI-driven insights.

For data analysts, AI researchers, and enterprise technology leaders, the takeaway is to evaluate AI analytics solutions based on these reasoning capabilities. Does the tool carry context across turns? Can it explain how it got an answer? Does it handle multi-intent queries gracefully? By understanding the failure modes outlined here, stakeholders can ask the right questions when assessing enterprise NLP solutions. The goal should be to move beyond a simplistic Q&A bot to a partner that can engage in complex analytical conversations.

Connecty’s Intent Graph approach is one example of this new breed of solution. It represents the convergence of generative AI with robust analytical frameworks – truly a next-gen analytics tool for the enterprise. With such technology, organizations can finally trust an AI assistant with their toughest questions and expect answers that are accurate, comprehensive, and contextual.

Ready to transform your analytics workflow? Book a Demo today to see how Connecty’s Intent Graph Agent can unlock insights hidden in your data and empower your team with reliable, conversational analytics.